Discovering Knowledge Deficiencies of Language Models on Massive Knowledge Base

Abstract

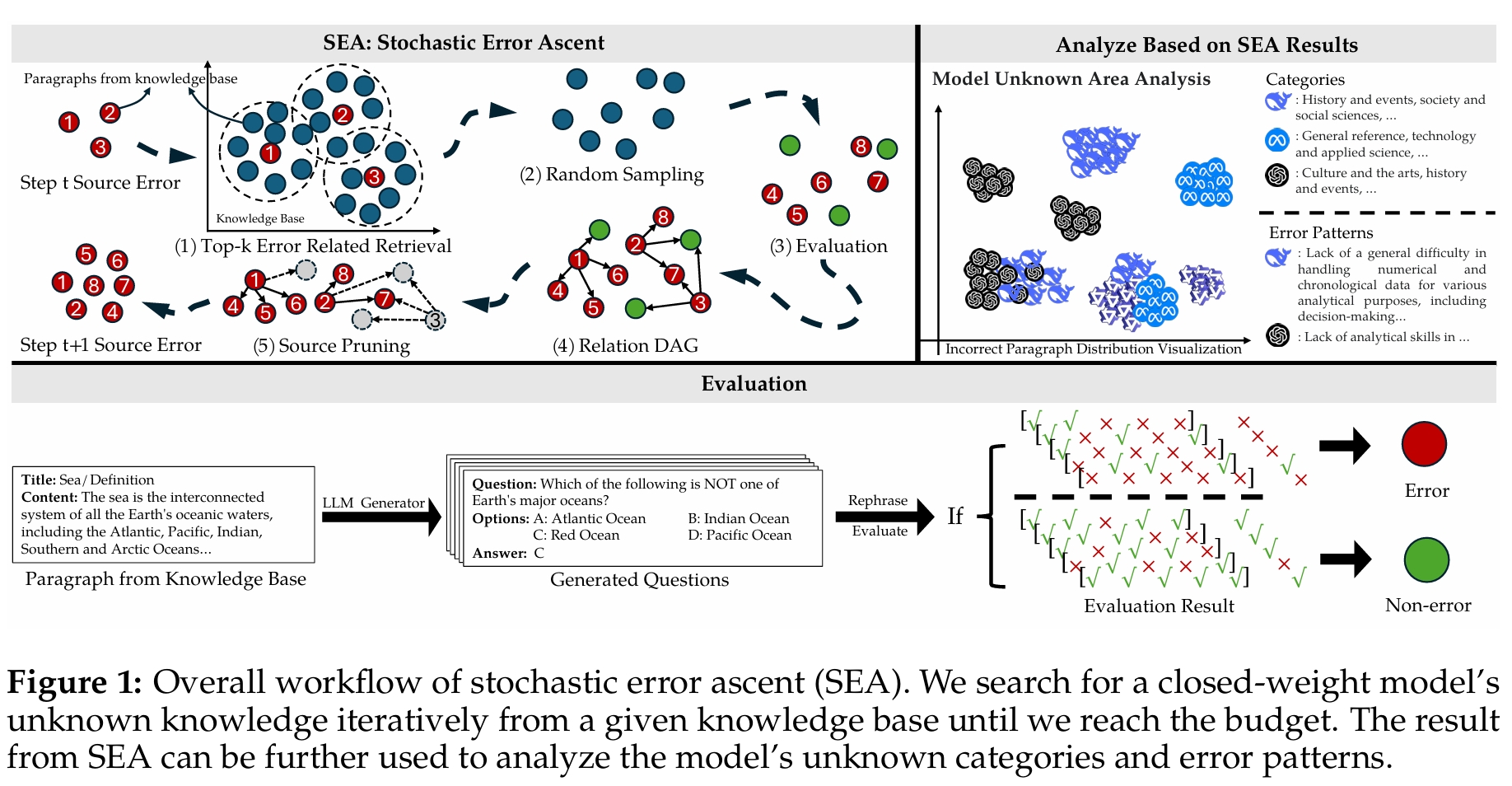

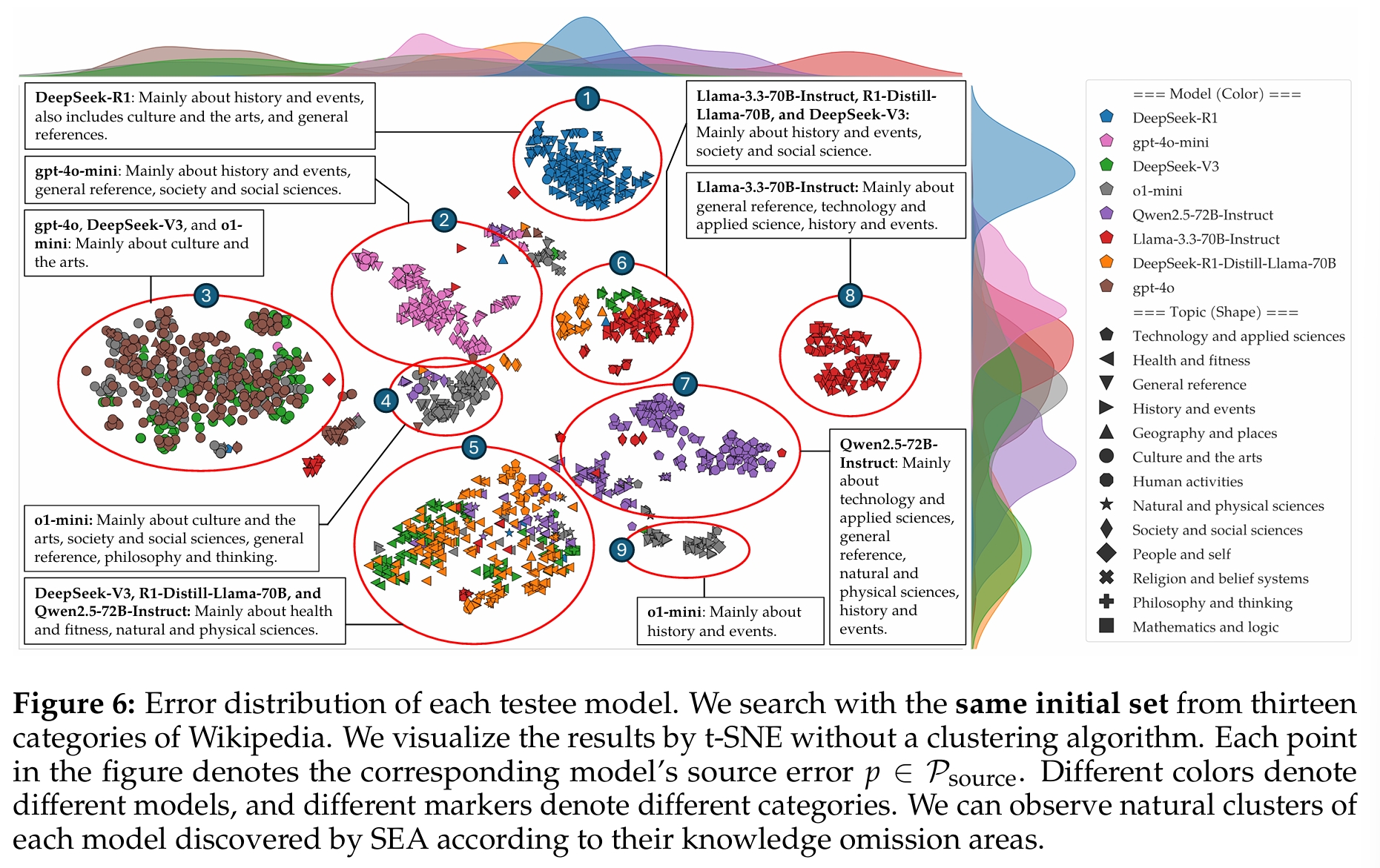

Large language models (LLMs) possess impressive linguistic capabilities but often fail to faithfully retain factual knowledge, leading to hallucinations and unreliable outputs. Understanding LLMs’ knowledge deficiencies by exhaustively evaluating them against full-scale knowledge bases is computationally prohibitive, especially for closed-weight models. We propose stochastic error ascent (SEA), a scalable and efficient framework for discovering knowledge deficiencies (errors) in closed-weight LLMs under a strict query budget. Rather than naively probing all knowledge candidates, SEA formulates error discovery as a stochastic optimization process: it iteratively retrieves new high-error candidates by leveraging semantic similarity to previously observed failures. To further enhance search efficiency and coverage, SEA employs hierarchical retrieval across document and paragraph levels, and constructs a relation directed acyclic graph (DAG) to model error propagation and identify systematic failure modes. Empirically, SEA uncovers 40.7× more knowledge errors than Automated Capability Discovery and 26.7% more than AutoBencher, while reducing the cost per error by 599× and 9×, respectively. Human evaluation confirms the high quality of the generated questions, while ablation and convergence analyses validate the contribution of each component of SEA. Further analysis of the discovered errors reveals correlated failure patterns across LLM families and recurring deficits, highlighting the need for better data coverage and targeted fine-tuning in future LLM development.
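The error-discovery loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the knowledge base is a plain list of text chunks, `embed` is a toy bag-of-words stand-in for a real text encoder, and the hypothetical `is_error` callback stands in for generating a question from a chunk, querying the closed-weight model once, and judging its answer. Each round draws the next batch at random from the unseen chunks most similar to any error found so far, and the loop stops once the query budget is spent.

```python
import math
import random
from collections import Counter


def embed(text):
    """Toy bag-of-words vector (a stand-in for a real sentence encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def stochastic_error_ascent(kb, is_error, budget, batch_size=4, top_k=8, seed=0):
    """Hypothetical SEA-style search: spend at most `budget` queries, steering
    each new batch toward chunks that resemble previously observed errors.

    `is_error(chunk)` is an assumed callback that costs one model query.
    Returns (indices of erroneous chunks, number of queries spent).
    """
    rng = random.Random(seed)
    vecs = [embed(chunk) for chunk in kb]
    unseen = set(range(len(kb)))
    errors, spent = [], 0
    # No failures are known yet, so the first batch is uniformly random.
    batch = rng.sample(sorted(unseen), min(batch_size, len(unseen)))
    while batch and spent < budget:
        for i in batch:
            if spent >= budget:
                break
            unseen.discard(i)
            spent += 1
            if is_error(kb[i]):
                errors.append(i)
        if not unseen:
            break
        if errors:
            # Score each unseen chunk by its closest similarity to any known error.
            def score(j):
                return max(cosine(vecs[j], vecs[e]) for e in errors)

            pool = sorted(sorted(unseen), key=score, reverse=True)[:top_k]
        else:
            pool = sorted(unseen)  # still no signal: keep sampling uniformly
        # Stochastic step: draw the next batch at random from the high-scoring pool.
        batch = rng.sample(pool, min(batch_size, len(pool)))
    return errors, spent
```

Sampling from a shortlist rather than always taking the single most similar chunk keeps some exploration in the search, which is one way to read the "stochastic" in the method's name.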

Key Contributions

- Stochastic Error Ascent (SEA): A novel framework that efficiently identifies knowledge deficiencies in LLMs by modeling error discovery as a stochastic optimization process.

- Hierarchical Retrieval: Combines document-level and paragraph-level retrieval to improve search efficiency and coverage.

- Relation DAG Construction: Builds a directed acyclic graph to model error propagation and uncover systematic failure patterns.

- Empirical Validation: SEA uncovers 40.7× more knowledge errors than Automated Capability Discovery and 26.7% more than AutoBencher, while reducing the cost per error by 599× and 9×, respectively.
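Hierarchical retrieval can be illustrated as a two-stage search: shortlist whole documents first, then rank paragraphs only inside that shortlist, so paragraph-level scoring never has to touch the full corpus. The sketch below assumes a corpus stored as a mapping from document title to a list of paragraphs and uses a toy bag-of-words similarity; `hierarchical_retrieve`, `n_docs`, and `n_paras` are hypothetical names, and the actual SEA retriever may differ.

```python
import math
from collections import Counter


def embed(text):
    # Toy bag-of-words vector; a real system would use a dense text encoder.
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hierarchical_retrieve(corpus, query, n_docs=2, n_paras=3):
    """Hypothetical two-stage retrieval over {title: [paragraph, ...]}.

    Returns up to `n_paras` (title, paragraph) pairs, best match first.
    """
    q = embed(query)
    # Stage 1: document-level scoring on each document's concatenated text.
    doc_scores = {title: cosine(q, embed(" ".join(paras)))
                  for title, paras in corpus.items()}
    top_docs = sorted(doc_scores, key=doc_scores.get, reverse=True)[:n_docs]
    # Stage 2: paragraph-level scoring restricted to the shortlisted documents.
    candidates = [(title, p) for title in top_docs for p in corpus[title]]
    candidates.sort(key=lambda tp: cosine(q, embed(tp[1])), reverse=True)
    return candidates[:n_paras]
```

The design trade-off is that a relevant paragraph buried in a document with a low overall score is missed; raising `n_docs` widens coverage at the cost of more paragraph comparisons.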

SEA Framework Overview
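One plausible reading of the relation DAG listed among the contributions is a provenance graph over discovered errors: an edge u → v records that the neighbourhood of error u surfaced error v in a later iteration. Because edges always point forward in time, the graph cannot contain cycles, and root errors with many descendants mark candidate systematic failure modes. The `ErrorDAG` class below is a hypothetical sketch of that interpretation, not the paper's exact construction.

```python
from collections import defaultdict


class ErrorDAG:
    """Hypothetical relation DAG: an edge parent -> node means the error `parent`,
    observed at an earlier step, seeded the retrieval that surfaced `node`.
    Edges only point forward in time, so no cycle can form."""

    def __init__(self):
        self.step = {}                     # error id -> iteration first observed
        self.children = defaultdict(set)   # error id -> errors it led to

    def add_error(self, node, step, parent=None):
        if node in self.step:
            return  # keep only the first observation of an error
        # Raises KeyError if `parent` was never recorded.
        if parent is not None and self.step[parent] >= step:
            raise ValueError("edges must point forward in time")
        self.step[node] = step
        if parent is not None:
            self.children[parent].add(node)

    def descendants(self, node):
        # Iterative DFS over every error reachable from `node`.
        seen, stack = set(), [node]
        while stack:
            for child in self.children[stack.pop()]:
                if child not in seen:
                    seen.add(child)
                    stack.append(child)
        return seen

    def roots(self):
        has_parent = {c for kids in self.children.values() for c in kids}
        return [n for n in self.step if n not in has_parent]

    def failure_modes(self, min_size=2):
        """Rank root errors by how many downstream errors they propagated to."""
        ranked = [(r, len(self.descendants(r))) for r in self.roots()]
        return sorted([x for x in ranked if x[1] >= min_size], key=lambda x: -x[1])
```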

Experimental Results

- Error Discovery Efficiency:

  - SEA uncovers 40.7× more knowledge errors than Automated Capability Discovery.

  - SEA identifies 26.7% more errors than AutoBencher.

- Cost Reduction:

  - Achieves a 599× reduction in cost per error compared to Automated Capability Discovery.

  - Achieves a 9× reduction in cost per error compared to AutoBencher.

- Human Evaluation: Confirms the high quality of questions generated by SEA.

- Ablation Studies: Validate the contribution of each component within the SEA framework.
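The cost comparisons above are expressed in cost per error. Assuming it is defined as total query spend divided by the number of errors found, a reduction factor such as 599× is the ratio of a baseline's cost per error to SEA's. The helper names and the dollar figures in the usage lines are hypothetical and are not taken from the paper.

```python
def cost_per_error(total_cost, num_errors):
    """Assumed definition: total query spend divided by errors found."""
    if num_errors == 0:
        return float("inf")  # no errors found: every unit of spend was wasted
    return total_cost / num_errors


def reduction_factor(baseline_cpe, method_cpe):
    # How many times cheaper each discovered error is under the method.
    return baseline_cpe / method_cpe


# Hypothetical figures for illustration only.
baseline = cost_per_error(50.0, 10)   # $50 spent, 10 errors found
method = cost_per_error(20.0, 400)    # $20 spent, 400 errors found
print(reduction_factor(baseline, method))
```

Because the ratio combines both spend and yield, a method can lower cost per error either by finding more errors for the same budget or by spending less per query.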

Conclusion

SEA presents a scalable and efficient approach to uncovering knowledge deficiencies in LLMs, particularly under limited query budgets. By exploiting semantic similarity to previously observed failures and modeling error propagation, SEA finds substantially more knowledge errors at a lower cost per error than prior methods, paving the way for more reliable and accurate language models.

BibTeX

@misc{song2025discoveringknowledgedeficiencieslanguage,
  title={Discovering Knowledge Deficiencies of Language Models on Massive Knowledge Base},
  author={Linxin Song and Xuwei Ding and Jieyu Zhang and Taiwei Shi and Ryotaro Shimizu and Rahul Gupta and Yang Liu and Jian Kang and Jieyu Zhao},
  year={2025},
  eprint={2503.23361},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2503.23361},
}