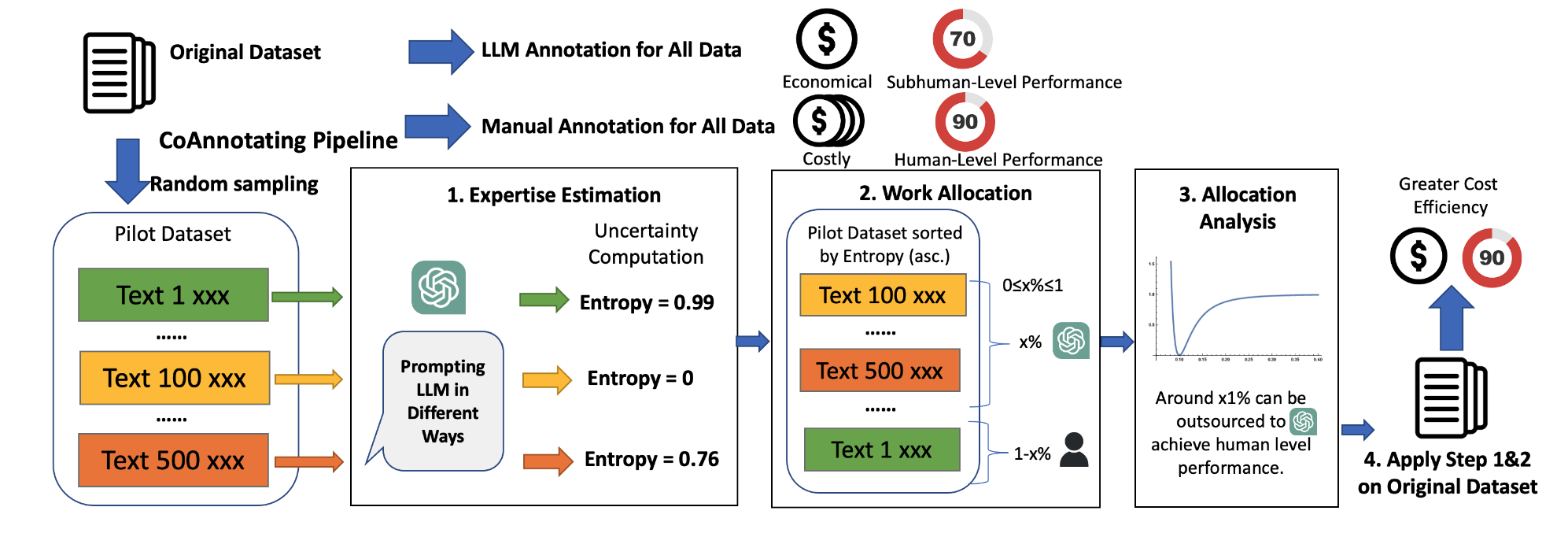

CoAnnotating: Uncertainty-Guided Work Allocation between Human and Large Language Models for Data Annotation

Abstract

Annotated data plays a critical role in Natural Language Processing (NLP), both for training models and for evaluating their performance. Given recent developments in Large Language Models (LLMs), models such as ChatGPT demonstrate zero-shot capability on many text-annotation tasks, comparable with or even exceeding that of human annotators. Such LLMs can serve as alternatives to manual annotation because of their lower cost and higher scalability. However, limited work has leveraged LLMs as complementary annotators or explored how annotation work is best allocated between humans and LLMs to achieve both quality and cost objectives. We propose CoAnnotating, a novel paradigm for Human-LLM co-annotation of unstructured texts at scale. Under this framework, we utilize uncertainty to estimate LLMs’ annotation capability. Our empirical study, with results on different datasets, shows CoAnnotating to be an effective means of allocating work.
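The idea of using uncertainty to decide who annotates what can be illustrated with a minimal sketch. The sketch assumes that each text is labeled several times by an LLM and that disagreement among those labels, measured here as Shannon entropy, stands in for uncertainty. The entropy measure, the `0.5` threshold, and the names `label_entropy` and `allocate` are illustrative assumptions, not details stated in the abstract.

```python
import math
from collections import Counter


def label_entropy(labels):
    """Shannon entropy (in bits) of the empirical distribution of labels."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def allocate(llm_labels_per_item, threshold):
    """Send an item to a human when the LLM's repeated labels disagree too much.

    llm_labels_per_item maps an item id to the list of labels the LLM produced
    for that item (hypothetical input format). threshold is an assumed cutoff.
    """
    allocation = {}
    for item_id, labels in llm_labels_per_item.items():
        uncertain = label_entropy(labels) > threshold
        allocation[item_id] = "human" if uncertain else "llm"
    return allocation


# Toy data: the LLM agrees with itself on t1 and is split on t2.
samples = {
    "t1": ["pos", "pos", "pos", "pos"],
    "t2": ["pos", "neg", "pos", "neg"],
}
result = allocate(samples, threshold=0.5)
```

In this toy case, `t1` stays with the LLM because its labels are unanimous, while `t2` is routed to a human annotator. Raising the threshold would shift more work to the LLM and lower the cost, at the risk of lower quality.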

BibTeX

@inproceedings{li2023coannotating,
  title={CoAnnotating: Uncertainty-Guided Work Allocation between Human and Large Language Models for Data Annotation},
  author={Minzhi Li and Taiwei Shi and Caleb Ziems and Min-Yen Kan and Nancy F. Chen and Zhengyuan Liu and Diyi Yang},
  booktitle={Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing},
  year={2023},
}